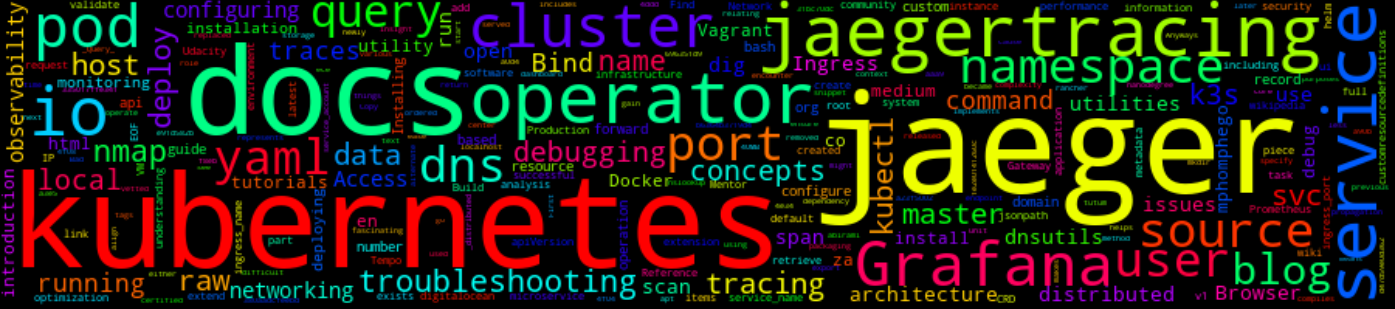

How To Configure Jaeger Data Source On Grafana And Debug Network Issues With Bind-utilities.


The Story

As a mentor for a Udacity nanodegree, I realized that most students had difficulty adding Jaeger as a tracing data source to Grafana (alongside Prometheus) running in a Kubernetes cluster.

According to the docs:

Jaeger is a distributed tracing system released as open source by Uber Technologies. It is used for monitoring and troubleshooting microservices-based distributed systems, including distributed context propagation, distributed transaction monitoring, root cause analysis, service dependency analysis and performance/latency optimization.

At this point, you might be wondering: what is distributed tracing?

Understanding application behaviour in a microservice architecture can be challenging. A single incoming request may span several services, and each intermediate service may perform one or more operations on that request. This makes problems harder to diagnose and slower to resolve.

Distributed tracing gives you insight into each step of a request and helps identify where failures or poor performance occur.

I therefore decided to write this guide, which walks you through installing Jaeger, adding it to Grafana as a data source, and troubleshooting the integration.

Note: This post is not about using Jaeger for distributed tracing or for backend/frontend application performance/latency optimization. If that interests you, check out this post, which I found very useful.

Note: This post assumes that:

- You are familiar with Kubernetes

- You have a running Kubernetes cluster and,

- You have already installed Grafana and Prometheus on the cluster

If not, refer to a previous post on how to install Prometheus & Grafana using Helm 3 on a Kubernetes cluster running on a Vagrant VM.

The Walk-through

This section is divided into 4 parts:

- Installing Jaeger Operator on Kubernetes

- Access Jaeger UI on Browser

- Configuring Jaeger Data Source on Grafana

- Debugging and Troubleshooting

Installing Jaeger Operator on Kubernetes

First, we need to install the Jaeger Operator.

The Jaeger Operator is an implementation of a Kubernetes Operator. Operators are pieces of software that ease the operational complexity of running another piece of software. More technically, Operators are a method of packaging, deploying, and managing a Kubernetes application.

The commands below create the observability namespace, install the Jaeger custom resource definition (CRD) for apiVersion: jaegertracing.io/v1 together with the RBAC resources the operator needs, and deploy the Jaeger Operator into that namespace.

```shell
export namespace=observability
export jaeger_version=v1.28.0

kubectl create namespace ${namespace}

kubectl create -f https://raw.githubusercontent.com/jaegertracing/jaeger-operator/${jaeger_version}/deploy/crds/jaegertracing.io_jaegers_crd.yaml
kubectl create -n ${namespace} -f https://raw.githubusercontent.com/jaegertracing/jaeger-operator/${jaeger_version}/deploy/service_account.yaml
kubectl create -n ${namespace} -f https://raw.githubusercontent.com/jaegertracing/jaeger-operator/${jaeger_version}/deploy/role.yaml
kubectl create -n ${namespace} -f https://raw.githubusercontent.com/jaegertracing/jaeger-operator/${jaeger_version}/deploy/role_binding.yaml
kubectl create -n ${namespace} -f https://raw.githubusercontent.com/jaegertracing/jaeger-operator/${jaeger_version}/deploy/operator.yaml
kubectl create -f https://raw.githubusercontent.com/jaegertracing/jaeger-operator/${jaeger_version}/deploy/cluster_role.yaml
kubectl create -f https://raw.githubusercontent.com/jaegertracing/jaeger-operator/${jaeger_version}/deploy/cluster_role_binding.yaml
```
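Before creating a Jaeger instance, it can be worth waiting until the CRD is registered and the operator is up, so the new instance is picked up straight away. Below is a minimal sketch of such a wait. `wait_for_jaeger_operator` is a hypothetical helper name, and it assumes the CRD is called `jaegers.jaegertracing.io` and the operator Deployment `jaeger-operator`, which is how I understand the v1.28.0 manifests above name them.

```shell
#!/usr/bin/env bash
# Hypothetical helper: block until the Jaeger CRD is established and the
# jaeger-operator Deployment has finished rolling out.
wait_for_jaeger_operator() {
  local ns="${1:-observability}"   # namespace the operator was deployed into
  local timeout="${2:-120s}"       # upper bound for each wait step

  # CRD objects are named <plural>.<group>; wait until the API server serves it
  kubectl wait --for=condition=Established crd/jaegers.jaegertracing.io \
    --timeout="${timeout}" || return 1

  # Returns once the Deployment's rollout is complete (pods available)
  kubectl rollout status deployment/jaeger-operator -n "${ns}" \
    --timeout="${timeout}"
}
```

For example, run `wait_for_jaeger_operator "${namespace}" 180s` before moving on to the next step.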

Once the jaeger-operator deployment has been created, we need to create a Jaeger instance, as shown in the snippet below:

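```shell
mkdir -p jaeger-tracing

# Use > (not >>) so re-running the snippet overwrites the file instead of appending a duplicate document
cat > jaeger-tracing/jaeger.yaml <<EOF
apiVersion: jaegertracing.io/v1
kind: Jaeger
metadata:
  name: my-traces
  namespace: ${namespace}
EOF

kubectl apply -n ${namespace} -f jaeger-tracing/jaeger.yaml
```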
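The minimal manifest above relies on the operator's defaults, which as far as I know are the `allInOne` strategy (agent, collector and query in a single pod) with in-memory storage. That is fine for a lab cluster, but traces are lost whenever the pod restarts. If you prefer the manifest to state this explicitly, here is a sketch of a hypothetical `render_jaeger_cr` function that prints it with those two fields filled in.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a Jaeger custom resource that spells out the
# defaults the minimal manifest relies on.
render_jaeger_cr() {
  local name="$1" ns="$2"
  if [[ -z "${name}" || -z "${ns}" ]]; then
    echo "usage: render_jaeger_cr NAME NAMESPACE" >&2
    return 1
  fi
  cat <<EOF
apiVersion: jaegertracing.io/v1
kind: Jaeger
metadata:
  name: ${name}
  namespace: ${ns}
spec:
  # allInOne runs agent, collector and query in a single pod
  strategy: allInOne
  # In-memory storage: traces do not survive a pod restart
  storage:
    type: memory
EOF
}
```

It could then be applied with `render_jaeger_cr my-traces "${namespace}" | kubectl apply -n "${namespace}" -f -`.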

Once the Jaeger instance named my-traces has been created, we can verify that its pods and services are running:

```shell
kubectl get -n ${namespace} pods,svc
```
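Right after the instance is created, its pod may still be starting. Here is a sketch of a hypothetical `wait_for_jaeger_pods` helper that blocks until the pods report Ready. It assumes the instance pods carry the `app=jaeger` label, the same selector the port-forward command in the next section uses.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait until every pod labelled app=jaeger in the
# namespace has the Ready condition.
wait_for_jaeger_pods() {
  local ns="${1:-observability}"   # namespace of the Jaeger instance
  local timeout="${2:-180s}"       # how long to wait before giving up
  kubectl wait --for=condition=Ready pod -l app=jaeger \
    -n "${ns}" --timeout="${timeout}"
}
```

Note that `kubectl wait` fails straight away if no matching pod exists yet, so run it once `kubectl get pods` lists the pod.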

The Jaeger UI is served via the Ingress.

An Ingress exposes HTTP and HTTPS routes from outside the cluster to services within the cluster. We can verify that the Ingress exists by running:

```shell
kubectl get -n ${namespace} ingress -o yaml | tail
```
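If you only want the values the note below refers to, a jsonpath query can print each Ingress name with the Service and port it forwards to. This is a sketch that assumes the operator's Ingress uses a default backend with the `networking.k8s.io/v1` field names, the same fields the DNS command later in this post reads. `show_ingress_backends` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "<ingress>\t<service>:<port>" for every Ingress
# in the namespace, using the v1 defaultBackend fields.
show_ingress_backends() {
  local ns="${1:-observability}"
  kubectl get -n "${ns}" ingress \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.defaultBackend.service.name}{":"}{.spec.defaultBackend.service.port.number}{"\n"}{end}'
}
```

Older clusters that only serve the v1beta1 Ingress API use different field names, so the backend columns would come out empty there.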

Note: The service name and port number will be useful later when setting up data sources on Grafana.

Access Jaeger UI on Browser

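For testing purposes, we can port-forward the Jaeger pod so that the UI is reachable from our local machine:

```shell
kubectl port-forward -n ${namespace} \
  $(kubectl get pods -n ${namespace} -l=app="jaeger" -o name) --address 0.0.0.0 16686:16686
```

Then open http://localhost:16686 in a browser to validate that the installation was successful. Because `--address 0.0.0.0` makes the forward listen on all interfaces, you can also use the VM's IP address on port 16686 if kubectl is running inside a Vagrant box.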
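To check the forward from the command line instead, you can request `/api/services`. To my knowledge, this is the HTTP endpoint the Jaeger UI calls to fill its service drop-down, and it is an internal API rather than a documented, stable one. Here is a sketch of a hypothetical `check_jaeger_query` helper, assuming the forward is listening on `localhost:16686`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the Jaeger query service answers
# /api/services with HTTP 200.
check_jaeger_query() {
  local base="${1:-http://localhost:16686}"
  local code
  # -w prints just the status code; curl exits non-zero if it cannot connect
  code=$(curl -s -o /dev/null -w '%{http_code}' "${base}/api/services") || return 1
  [[ "${code}" == "200" ]]
}
```

Usage: `check_jaeger_query && echo "Jaeger query is reachable"`.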

Configuring Jaeger Data Source on Grafana

To configure Jaeger as a data source, we need the name of the Jaeger query service, because Grafana reaches Jaeger through that service's in-cluster DNS name and port.

Query is a service that retrieves traces from storage and hosts a UI to display them.

According to Kubernetes docs:

Every Service defined in the cluster (including the DNS server itself) is assigned a DNS name. By default, a client Pod’s DNS search list includes the Pod’s namespace and the cluster’s default domain.

We can build the full DNS name of the Jaeger Query endpoint, which we will use as the data source URL in Grafana.

According to Kubernetes docs:

A DNS query may return different results based on the namespace of the pod making it. DNS queries that don’t specify a namespace are limited to the pod’s namespace. Access services in other namespaces by specifying them in the DNS query.
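To make this namespace behaviour concrete, the sketch below lists, in order, the names a pod's resolver would try for a given name. It assumes the usual Kubernetes pod `resolv.conf` (`search <pod_namespace>.svc.cluster.local svc.cluster.local cluster.local` with `options ndots:5`) and glibc-style search rules, and it ignores any extra search domains inherited from the node. `dns_candidates` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the lookup order for NAME from a pod in POD_NS,
# modelling the search list and ndots:5 rule of a typical pod resolv.conf.
dns_candidates() {
  local name="$1" pod_ns="$2" domain="${3:-cluster.local}" ndots=5
  if [[ "${name}" == *. ]]; then
    echo "${name}"               # trailing dot: absolute name, no search list
    return 0
  fi
  local dots="${name//[^.]/}"    # strip everything except dots to count them
  local searches=("${pod_ns}.svc.${domain}" "svc.${domain}" "${domain}")
  # Names with at least ndots dots are tried as-is first
  (( ${#dots} >= ndots )) && echo "${name}"
  local s
  for s in "${searches[@]}"; do
    echo "${name}.${s}"
  done
  # Names with fewer dots are tried as-is only after the search list
  (( ${#dots} < ndots )) && echo "${name}"
  return 0
}
```

For a pod in the `default` namespace, `dns_candidates my-traces-query default` starts with `my-traces-query.default.svc.cluster.local`, which does not exist. In contrast, `dns_candidates my-traces-query.observability default` reaches `my-traces-query.observability.svc.cluster.local` on its second try. This is why the namespace has to be part of the name when Grafana and Jaeger live in different namespaces.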

The code below builds the DNS name of the Jaeger query service, which lives in the observability namespace of a local cluster.

In Kubernetes, a Service is an abstraction that defines a logical set of Pods and a policy by which to access them (sometimes this pattern is called a micro-service).

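Notice the pattern `<service_name>.<namespace>.svc.cluster.local`:

```shell
# The operator's Ingress forwards to the query Service; read that Service's name and port
service_name=$(kubectl get -n ${namespace} ingress -o jsonpath='{.items[0].spec.defaultBackend.service.name}')
service_port=$(kubectl get -n ${namespace} ingress -o jsonpath='{.items[0].spec.defaultBackend.service.port.number}')

# Grafana expects a full URL, including the http:// scheme
echo -e "\n\nhttp://${service_name}.${namespace}.svc.cluster.local:${service_port}"
```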
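The `<service_name>.<namespace>.svc.cluster.local` pattern can also be wrapped in a small function. This helps if your cluster uses a domain other than the default `cluster.local`, or if you want to reuse it for other Services. Here is a minimal sketch. `service_url` is a hypothetical name, and the `http` scheme default assumes Jaeger Query is served over plain HTTP, as in this setup.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compose <scheme>://<service>.<namespace>.svc.<domain>:<port>
service_url() {
  local svc="$1" ns="$2" port="$3" domain="${4:-cluster.local}" scheme="${5:-http}"
  # Require a service, a namespace and a numeric port
  if [[ -z "${svc}" || -z "${ns}" || ! "${port}" =~ ^[0-9]+$ ]]; then
    echo "usage: service_url SERVICE NAMESPACE PORT [DOMAIN] [SCHEME]" >&2
    return 1
  fi
  echo "${scheme}://${svc}.${ns}.svc.${domain}:${port}"
}
```

For example, `service_url my-traces-query observability 16686` prints `http://my-traces-query.observability.svc.cluster.local:16686`.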

Copy the echoed URL (including the port number), open the Grafana UI, and add a Jaeger data source with that URL. Then click Save & test to confirm that the connection works.
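Clicking through the UI works, but Grafana can also load data sources from provisioning files at startup. This keeps the data source around if Grafana is redeployed without persistent storage. The sketch below renders such a file for Jaeger. `render_grafana_jaeger_datasource` is a hypothetical helper, and how the file reaches Grafana's provisioning directory (typically `/etc/grafana/provisioning/datasources/`) depends on how you installed Grafana, for example through your Helm chart's values.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a Grafana data source provisioning file for Jaeger.
render_grafana_jaeger_datasource() {
  local url="$1" name="${2:-Jaeger}"
  if [[ -z "${url}" ]]; then
    echo "usage: render_grafana_jaeger_datasource URL [NAME]" >&2
    return 1
  fi
  cat <<EOF
apiVersion: 1
datasources:
  - name: ${name}
    type: jaeger      # ID of Grafana's built-in Jaeger data source
    access: proxy     # requests are made by the Grafana server, not the browser
    url: ${url}
EOF
}
```

With `access: proxy`, the requests are made by the Grafana server, which runs inside the cluster, so the `*.svc.cluster.local` name can be resolved. Your browser could not resolve it.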

If you encounter an error such as “Jaeger: Bad Gateway. 502. Bad Gateway”, go to Debugging and Troubleshooting.

The image below shows a successful integration, where we can query Jaeger Span traces on Grafana.

A span represents a logical unit of work in Jaeger that has an operation name, the start time of the operation, and the duration. Spans may be nested and ordered to model causal relationships.

Debugging and Troubleshooting

- The Jaeger docs contain a list of commonly encountered issues; follow this link for more information.

- If your issues relate to DNS, make sure that `kube-dns` is running. Every Service object has an in-cluster DNS name of `<service_name>.<namespace>.svc.cluster.local`, so any other workload can reach your `<service_name>` in that `<namespace>` by this name.

- For the next task, we need to run a container in the cluster that provides useful BIND utilities such as `dig`, `host` and `nslookup`.
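For the `kube-dns` check in the list above, here is a sketch of a hypothetical `check_cluster_dns` helper. It assumes the cluster DNS pods live in `kube-system` with the `k8s-app=kube-dns` label. This is the convention for both kube-dns and CoreDNS deployments, including the CoreDNS that k3s ships.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if at least one cluster DNS pod is Running.
check_cluster_dns() {
  local phases
  # Space-separated pod phases, e.g. "Running Running"
  phases=$(kubectl get pods -n kube-system -l k8s-app=kube-dns \
    -o jsonpath='{.items[*].status.phase}') || return 1
  if [[ " ${phases} " == *" Running "* ]]; then
    echo "cluster DNS pod(s) running: ${phases}"
  else
    echo "no running cluster DNS pods found (phases: '${phases}')" >&2
    return 1
  fi
}
```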

After a few Google searches, I found the popular tutum/dnsutils image and decided to use it for debugging, after investigating and vetting it for malicious packages.

Read more about how to harden the security of your Docker environment.

The command below creates a pod based on the dnsutils Docker image and opens a bash shell in it:

```shell
vagrant@dashboard:~> kubectl run dnsutils --image tutum/dnsutils -ti -- bash
```
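If you only need a single answer rather than an interactive shell, the same image can run one lookup in a throwaway pod. Below is a sketch. `dns_lookup_in_cluster` is a hypothetical helper. The `--rm` flag deletes the pod once the command exits, and the pod name gets the shell's PID appended so it does not clash with the interactive `dnsutils` pod.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run nslookup for NAME in a short-lived pod.
dns_lookup_in_cluster() {
  local name="$1" image="${2:-tutum/dnsutils}"
  if [[ -z "${name}" ]]; then
    echo "usage: dns_lookup_in_cluster NAME [IMAGE]" >&2
    return 1
  fi
  # -i attaches to the pod's output; --restart=Never creates a bare pod
  kubectl run "dnsutils-$$" --image "${image}" --rm -i --restart=Never \
    -- nslookup "${name}"
}
```

Usage: `dns_lookup_in_cluster my-traces-query.observability.svc.cluster.local`.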

Note: I am running k3s on a Vagrant box. In case you are not familiar with k3s:

K3s is designed to be a single binary of less than 40MB that completely implements the Kubernetes API. To achieve this, they removed a lot of extra drivers that didn’t need to be part of the core and are easily replaced with add-ons.

K3s is a fully CNCF (Cloud Native Computing Foundation) certified Kubernetes offering. This means that you can write your YAML to operate against a regular “full-fat” Kubernetes, and they’ll also apply against a k3s cluster.

Anyway, let’s not get side-tracked. If you have used Docker before, think of `kubectl run` as the Kubernetes counterpart of `docker run`: it creates and runs a particular image in a pod.

The commands below use the dig (Domain Information Groper) utility to query the wildcard name `*.*.svc.cluster.local`, which returns the IP addresses from the matching A records in the Kubernetes domain. For each IP, `host` performs a reverse lookup, and the full hostname is printed to STDOUT if it contains the observability namespace.

```shell
root@dnsutils:/# namespace=observability
root@dnsutils:/# for IP in $(dig +short '*.*.svc.cluster.local'); do
    # Reverse-resolve each IP to its hostname(s)
    HOSTS=$(host "$IP")
    # Print only the entries that mention our namespace
    if grep -q "${namespace}" <<< "$HOSTS"; then
        echo "${HOSTS}"
    fi
done
```
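Because `grep` matches `observability` anywhere in the output, a namespace such as `observability-dev` would also slip through. A stricter filter is sketched below as a hypothetical `filter_namespace_hosts` function. It assumes `host` prints reverse lookups in its usual `<ip>.in-addr.arpa domain name pointer <hostname>.` form, and it keeps only hostnames containing `.<namespace>.svc.`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `host` reverse-lookup output on stdin and print
# only the hostnames that belong to namespace NS.
filter_namespace_hosts() {
  local ns="$1"
  awk -v ns="${ns}" '
    /domain name pointer/ {
      name = $NF                            # the hostname is the last field
      sub(/\.$/, "", name)                  # drop the trailing root dot
      if (index(name, "." ns ".svc.") > 0)  # match the namespace label exactly
        print name
    }'
}
```

Inside the dnsutils pod it could be used as `for IP in $(dig +short '*.*.svc.cluster.local'); do host "$IP"; done | filter_namespace_hosts observability | sort -u`.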

The image below highlights the hostname of the service we are interested in: my-traces-query.observability.svc.cluster.local.

Next, check whether port 16686 is open on that hostname using the nmap utility. Since nmap doesn’t come preinstalled in the container, we need to install it manually:

```shell
root@dnsutils:/# apt update -qq && apt install -y nmap
```

Once the utility is installed, we can scan the port that Jaeger Query should be listening on, as shown in Configuring Jaeger Data Source on Grafana:

```shell
root@dnsutils:/# nmap -p 16686 my-traces-query.observability.svc.cluster.local
```

The image below shows that port 16686 is open, which confirms that Jaeger Query can be reached both via the UI and as a Grafana data source.
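If you would rather not install nmap, bash can attempt a TCP connection on its own through its `/dev/tcp/<host>/<port>` redirection. Here is a sketch of a hypothetical `port_open` helper, assuming bash and coreutils' `timeout` are available in the container:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a TCP connection to HOST:PORT can be opened.
port_open() {
  local host="$1" port="$2" secs="${3:-3}"
  # Redirecting from /dev/tcp/HOST/PORT makes bash open a TCP connection;
  # timeout keeps it from hanging on ports that silently drop packets.
  timeout "${secs}" bash -c ': < "/dev/tcp/$0/$1"' "${host}" "${port}" 2>/dev/null
}
```

Usage: `port_open my-traces-query.observability.svc.cluster.local 16686 && echo open || echo closed`. Unlike nmap, the helper only reports whether a connection succeeded. It does not tell a closed port apart from a filtered one.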

I will try to update this post with new ways to debug as I find my way around Kubernetes, Jaeger and Grafana.

If you have any suggestions, leave a comment below and we will get in touch.

Reference

- Grafana Data Sources

- Jaeger: Operator for Kubernetes

- What are Custom Resource Definitions?

- Tracing in Grafana with Tempo and Jaeger

- A Guide to Deploying Jaeger on Kubernetes in Production

- Distributed Tracing with Jaeger on Kubernetes

- Build a monitoring infrastructure for your Jaeger installation

- How To Implement Distributed Tracing with Jaeger on Kubernetes

- An Introduction to the Kubernetes DNS Service

- Kubernetes DNS for Services and Pods

- How To Use Nmap to Scan for Open Ports

- How to Scan & Find All Open Ports with Nmap